Building an AI Agent

Enterprises face significant challenges in making supply chain decisions that maximize profits while adapting quickly to dynamic changes. Optimal supply chain operations rely on advanced analytics and real-time data processing to adapt to rapidly changing conditions and make informed decisions.

Optimization is everywhere. To meet customer commitments and minimize risks, organizations run thousands of what-if scenarios.

For instance, retail companies must consider factors such as traffic, weather, and miles driven to optimize routes and logistics and to reduce shipping costs, more than half of which are attributed to last-mile delivery.

Similarly, pharmaceutical companies must navigate complex supply chains, regulatory constraints, and high research and development costs, making real-time optimization difficult.

Financial institutions must balance risk and return in volatile markets, necessitating sophisticated mathematical models for decision-making.

The agriculture sector deals with unpredictable factors, such as weather and market demand, complicating cost and profit optimization.

In this post, we demonstrate how linear programming and LLMs, combined with the NVIDIA cuOpt microservice for optimization AI, can help you tackle these optimization challenges.

Linear programming

With NVIDIA cuOpt and NVIDIA NIM inference microservices, you can harness the power of AI agents to improve optimization, with supply chain efficiency being one of the most compelling and popular domains for such applications. In addition to the well-known vehicle routing problem (VRP), cuOpt can optimize for linearly constrained problems on the GPU, expanding the set of problems that cuOpt can solve in near-real time.
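For reference, a linearly constrained problem of this kind is usually written in the standard textbook form below. The symbols $c$, $A$, $b$, and $x$ are generic notation chosen for illustration, not names taken from cuOpt.

```latex
\begin{aligned}
\text{maximize}\quad   & c^{\top} x \\
\text{subject to}\quad & A x \le b, \\
                       & x \ge 0
\end{aligned}
```

Here $x \in \mathbb{R}^{n}$ holds the decision variables (for example, units shipped on each lane), $c$ holds the profit per unit, and each row of $A x \le b$ is one linear constraint such as a capacity or budget limit.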

The cuOpt AI agent is built using multiple LLM agents and acts as a natural language front end to cuOpt, enabling you to turn natural language queries into code and then into an optimized plan.

Large language models (LLMs) have shown promising results for chatbots, coding assistants, and even optimizers capable of solving small traveling salesperson problems (TSP). As good as LLMs are at providing intelligent responses given some context, the combinatorial space of question answering and planning for large-scale operations has been out of reach for today’s LLMs.

The cuOpt AI agent uses in-context learning, with NVIDIA NIM for llama3-70b handling reasoning tasks and NVIDIA NIM for codestral-22b-instruct-v0.1 handling coding tasks. Our pipeline splits the original prompt into intermediate tasks. Each of these tasks is processed by a specialized agent that outputs fine-grained units of data and code, which are fed in turn to other agents for subsequent tasks. This architecture achieves high accuracy because each LLM receives a specific sub-query, and the sub-queries can easily be parallelized to maximize responsiveness.
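The split-then-parallelize idea can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline used in the post: `split_prompt`, `handle_subtask`, and `run_pipeline` are hypothetical names, the semicolon-based split is an assumption, and the agent is a stub where a real system would call an LLM NIM endpoint.

```python
from concurrent.futures import ThreadPoolExecutor


def split_prompt(prompt: str) -> list[str]:
    """Split a compound what-if prompt into independent sub-queries.

    Assumption: sub-queries are separated by semicolons. A real pipeline
    would ask a reasoning LLM to do this decomposition.
    """
    return [part.strip() for part in prompt.split(";") if part.strip()]


def handle_subtask(subtask: str) -> dict:
    """Stub agent: returns a small structured unit for one sub-query."""
    return {"subtask": subtask, "words": len(subtask.split())}


def run_pipeline(prompt: str) -> list[dict]:
    """Process all sub-queries concurrently and return results in input order."""
    subtasks = split_prompt(prompt)
    with ThreadPoolExecutor(max_workers=4) as pool:
        # pool.map preserves input order even though the calls overlap in time.
        return list(pool.map(handle_subtask, subtasks))


results = run_pipeline(
    "increase demand at store A by 10%; close warehouse B; cap truck hours at 8"
)
for item in results:
    print(item)
```

Threads suit this sketch because calls to a remote inference service are I/O-bound, so the sub-queries spend most of their time waiting on the network rather than competing for the CPU.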

This flexible, parallel architecture keeps inference time constant as the data scales to millions of constraints and variables. However, that would not mean anything without a fast linear programming solver.

The cuOpt linear programming solver can solve problems of this magnitude in seconds so you can dedicate most of the total time to inference. It is fully CUDA-accelerated and has been optimized with CUDA math libraries, along with many other end-to-end optimizations.

Given a user problem and an optimization model, you can process a set of possible scenarios that users would like to test during their decision-making process, instead of waiting for the next day or manually setting up these experiments daily.

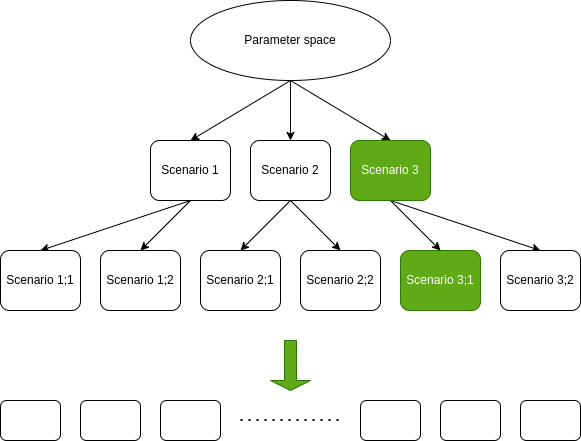

The cuOpt AI agent can understand natural language descriptions of business use cases through its integration with LLM NIM and run what-if scenario prompts to return a detailed answer showing how it came up with the results. This process represents a tree of possibilities accounting for disruptions, events, and parameter changes (Figure 1).
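The what-if loop described above can be sketched as follows: start from a baseline model, apply one parameter change per scenario, and re-solve. This is a toy under stated assumptions, not cuOpt. The two-variable problem is the classic textbook product-mix example, `solve_lp_2d` and `with_rhs` are hypothetical helpers, and the solver is a brute-force vertex enumeration that only works for small, bounded, two-variable problems.

```python
from itertools import combinations


def solve_lp_2d(c, constraints, tol=1e-9):
    """Maximize c[0]*x + c[1]*y subject to a1*x + a2*y <= b for each (a1, a2, b).

    Brute force: intersect every pair of constraint lines, keep the feasible
    intersection points, and return the best (value, x, y). Assumes the
    feasible region is bounded, so an optimum sits at a vertex.
    """
    best = None
    for (a1, a2, b1), (a3, a4, b2) in combinations(constraints, 2):
        det = a1 * a4 - a2 * a3
        if abs(det) < tol:
            continue  # parallel lines have no unique intersection
        x = (b1 * a4 - a2 * b2) / det  # Cramer's rule
        y = (a1 * b2 - a3 * b1) / det
        if all(p * x + q * y <= r + tol for p, q, r in constraints):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best


def with_rhs(constraints, index, new_b):
    """Return a copy of the constraints with one right-hand side replaced."""
    changed = list(constraints)
    a1, a2, _ = changed[index]
    changed[index] = (a1, a2, new_b)
    return changed


# Baseline: x <= 4, 2y <= 12, 3x + 2y <= 18, x >= 0, y >= 0.
BASE = [(1, 0, 4), (0, 2, 12), (3, 2, 18), (-1, 0, 0), (0, -1, 0)]
PROFIT = (3, 5)

SCENARIOS = {
    "baseline": BASE,
    "third capacity cut to 12": with_rhs(BASE, 2, 12),
    "second capacity raised to 16": with_rhs(BASE, 1, 16),
}

results = {name: solve_lp_2d(PROFIT, cons) for name, cons in SCENARIOS.items()}
for name, (value, x, y) in results.items():
    print(f"{name}: profit={value:.2f} at x={x:.2f}, y={y:.2f}")
```

In this toy, the baseline optimum is a profit of 36 at (2, 6). Cutting the third capacity lowers it to 30, and raising the second capacity lifts it to 42. Each scenario is an independent solve, which is why a fast solver makes it practical to explore many branches of the tree.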

By integrating an AI planner, organizations can overcome the complexities inherent in large-scale operations and establish AI-driven factories at an extraordinary scale.

The AI planner is an LLM-powered agent built on NVIDIA NIM, which is a set of accelerated inference microservices. A NIM is a container with pretrained models and CUDA acceleration libraries that is easy to download, deploy, and operate on-premises or in the cloud.

The AI planner is built with the following:

- An LLM NIM to understand planners’ intentions and direct the other models

- A NeMo Retriever RAG NIM to connect the LLM to proprietary data

- A cuOpt NIM for logistics optimization

The AI planner, built using LangGraph, is composed of multiple specialized agents and a set of tools. Each agent is an LLM NIM that handles some non-overlapping tasks and can call some tools when necessary:

- Manager: Dispatches each sub-task to specialized agents.

- Modeler: Specializes in modifying the current modeling of the problem to integrate the assigned sub-task.

- Coder: Writes code to solve one specific task prompted by one agent.

- Interpreter: Focuses on analyzing and comparing results before and after the new plan.
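The hand-off between the Manager, Modeler, Coder, and Interpreter roles can be sketched in plain Python. This is a hedged illustration only. It does not use the LangGraph API, every name here (`PlanState`, `AGENTS`, `manager`) is hypothetical, each agent is a stub where the real planner would call an LLM NIM, and the fixed modeler, coder, interpreter order is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PlanState:
    """Shared state passed from one agent to the next."""
    request: str
    model_changes: list = field(default_factory=list)
    code: str = ""
    report: str = ""
    trace: list = field(default_factory=list)


def modeler(state: PlanState) -> PlanState:
    # Stub: record how the optimization model should change for this request.
    state.model_changes.append(f"constraint for: {state.request}")
    state.trace.append("modeler")
    return state


def coder(state: PlanState) -> PlanState:
    # Stub: emit placeholder code that would apply the changes and re-solve.
    state.code = f"# apply {len(state.model_changes)} change(s), then re-solve"
    state.trace.append("coder")
    return state


def interpreter(state: PlanState) -> PlanState:
    # Stub: summarize the comparison between the old and the new plan.
    state.report = f"compared plans before and after: {state.request}"
    state.trace.append("interpreter")
    return state


AGENTS = {"modeler": modeler, "coder": coder, "interpreter": interpreter}


def manager(request: str, route=("modeler", "coder", "interpreter")) -> PlanState:
    """Dispatch the request to each specialized agent in turn."""
    state = PlanState(request=request)
    for name in route:
        state = AGENTS[name](state)
    return state


final = manager("supplier X ships two weeks late")
print(final.trace)
print(final.report)
```

Keeping each role as a function over a shared state object makes the responsibilities non-overlapping and easy to test in isolation, which mirrors the division of labor described above.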

The solution offers end-to-end visibility, demand forecasting methods, auto-fulfillment optimization, dynamic planning optimization, and integrated business planning in near-real time.

NVIDIA operates one of the largest and most complex supply chains in the world. The supercomputers we build connect tens of thousands of NVIDIA GPUs with hundreds of miles of high-speed optical cables.

This undertaking relies on the seamless collaboration of hundreds of partners, who deliver thousands of distinct components to a dozen factories, enabling the production of nearly 3,000 different products. A single disruption to our supply chain can affect our ability to meet our commitments.

To help us manufacture and deliver AI factories at an extraordinary scale, the NVIDIA business operations team expanded its use of an AI planner that lets us chat with our supply chain data. With cuOpt as the optimization brain behind the planner, the team can analyze thousands of possible scenarios in real time using natural language inputs. The analysis takes just seconds, giving us the speed and agility to respond to an ever-shifting supply chain.

These advanced technologies work in tandem, enabling your organization to navigate the intricate web of supply chain management with greater efficiency and precision.

Optimized decision-making

With the dramatic improvement in solver time, linear programming enables significantly faster decision-making, which can be applied to numerous use cases across various industries, including but not limited to the following:

- Resource allocation

- Cost optimization

- Scheduling

- Inventory planning

- Facility location planning

  - Supply chain networks such as warehouses, stores, vendors, or supplier placement

  - Transportation hubs

  - Public spaces such as hospitals, bus stops, fire stations for emergency services, cell towers for telcos, and so on

Source: https://developer.nvidia.com/blog/building-an-ai-agent-for-supply-chain-optimization-with-nvidia-nim-and-cuopt/